How to set up Celery with Django?

Celery is an asynchronous task framework that can be used to run long-running jobs for a Django web application.

The TLDR answer:

I highly recommend reading the detailed answer below before jumping into the implementation, since it covers several concepts that the steps here assume.

We are going to use Docker to set up all the dependencies, so that you don't have to struggle with different environments. Here are the steps to integrate Celery with a Django web application.

- Messaging broker - Celery can integrate with a number of messaging brokers, such as Redis and RabbitMQ. I prefer using Redis. Here is an example Redis service section in docker-compose.yaml:

    redis_server:
      image: redis:6.0.9
      container_name: redis_server
      ports:
        - 6379:6379
      command: redis-server
      volumes:
        - $PWD/redis-data:/var/lib/redis
        - $PWD/redis.conf:/usr/local/etc/redis/redis.conf

- We shall use Redis as a messaging broker. Since Celery supports Redis out of the box, you don't have to do anything major apart from the basic configuration.

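- First, install Celery using pip:

    pip install celery

  If the redis Python package is not already installed, install it as well (for example with pip install "celery[redis]"), since Celery needs it to talk to Redis.

- Create a new file celery.py in the same folder as your settings.py file: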

    import os

    from celery import Celery

    # Replace <project name> with the name of the folder that contains your settings.py file.
    celery_settings_value = "<project name>.settings"
    os.environ.setdefault("DJANGO_SETTINGS_MODULE", celery_settings_value)

    # Use the same <project name> here.
    app = Celery("<project name>")
    app.config_from_object("django.conf:settings", namespace="CELERY")
    app.autodiscover_tasks()

    task = app.task


    @app.task(bind=True)
    def debug_task(self, data):
        print(data)

- Next, modify the settings.py file by adding the config below. Make sure to update REDIS_HOST and REDIS_PORT with your Redis server connection details.

    # Celery connection details for local
    REDIS_HOST = 'redis_server'
    REDIS_PORT = 6379
    CELERY_BROKER_URL = f"redis://{REDIS_HOST}:{REDIS_PORT}"
    CELERY_RESULT_BACKEND = f"redis://{REDIS_HOST}:{REDIS_PORT}"

    # Replace REDIS_HOST and REDIS_PORT with your server connection details.

- In the steps above, you configured Celery to work with Redis and Django. Since Celery is an asynchronous task framework, it needs its own process to run, independent of Django. The Django application and Celery communicate about all tasks through the messaging broker, which in our case is Redis.

- If you run Django with Docker, create one more service similar to the Django service, but with a different command. If you are not using Docker, open a new shell and run the following command (backend is the project name used in this example):

    celery -A backend worker -l info

  With Docker, the equivalent service in docker-compose.yaml looks like this:

    celery:
      build: ./backend
      command: celery -A backend worker -l info
      volumes:
        - ./backend/:/app/
      env_file:
        - ./.env
      depends_on:
        - postgresql_db
        - redis_server

- Now you have your Celery service up and running.
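Because the `celery` service above loads `./.env`, the Redis connection details in settings.py do not have to be hard-coded. Here is a minimal sketch of reading them from the environment. It assumes the variables are named `REDIS_HOST` and `REDIS_PORT` (the original setup does not define them), and `build_redis_url` is a hypothetical helper, not part of Celery or Django:

```python
import os
from typing import Mapping


def build_redis_url(env: Mapping[str, str] = os.environ) -> str:
    """Build a redis:// URL, falling back to the values used in this post."""
    host = env.get("REDIS_HOST", "redis_server")
    port = int(env.get("REDIS_PORT", "6379"))  # fails early on a non-numeric port
    return f"redis://{host}:{port}"


# In settings.py, these two assignments would replace the hard-coded URLs.
CELERY_BROKER_URL = build_redis_url()
CELERY_RESULT_BACKEND = build_redis_url()
```

With this in place, the same settings file can point at `redis_server` inside Docker and at a different host elsewhere, just by changing the environment.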

How can you test Celery?

- Open the Django shell and call the task. You should see the task output printed in the terminal where you started the Celery worker.

    from backend.celery import debug_task

    debug_task.delay("this is async call")

For a quick example of how the final Celery integration with Django looks, check my example repo.

The detailed answer

When we develop web applications, we soon find features or tasks that take a long time to execute: for example, uploading a CSV file and then processing the data for analysis, or calling third-party APIs to do things like sending an email.

When such requirements come up, we can certainly build an API endpoint for them, but we soon realize that these endpoints become a bottleneck for the scalability of our system. For example, if we have an API to send emails and the email service takes a long time to respond, our own service could come crumbling down because of issues that are outside our control. So what is an ideal approach to solving such problems?

Use Messaging Queue based Asynchronous Task Framework

- Celery

I used a lot of jargon, so let us look at what it means in simple terms. Say that whenever a user signs up on your platform, you have to send a welcome email and also notify your sales team by creating an entry in the external CRM platform you use. You can do all of this while keeping the user waiting for the response, or you can create a new task for it and return the response to the client right away. The latter is the better approach because it decouples the slow work from the request and scales better.
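The difference between the two options can be sketched with the standard library alone. This is not Celery: a `ThreadPoolExecutor` stands in for the worker, `time.sleep` stands in for the slow email and CRM calls, and all function names are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def send_welcome_email(user: str) -> str:
    time.sleep(0.2)  # stand-in for a slow email provider
    return f"welcome email sent to {user}"


def create_crm_entry(user: str) -> str:
    time.sleep(0.2)  # stand-in for a slow external CRM API
    return f"CRM entry created for {user}"


def signup_blocking(user: str) -> list:
    """Option 1: the user waits until both slow calls have finished."""
    return [send_welcome_email(user), create_crm_entry(user)]


def signup_background(user: str, pool: ThreadPoolExecutor) -> list:
    """Option 2: hand the slow calls off and respond immediately."""
    return [pool.submit(send_welcome_email, user), pool.submit(create_crm_entry, user)]


def timed(func, *args):
    """Return the function's result and how long the call took in seconds."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


with ThreadPoolExecutor(max_workers=2) as pool:
    _, blocking_seconds = timed(signup_blocking, "alice")
    futures, background_seconds = timed(signup_background, "alice", pool)
    outcomes = [future.result() for future in futures]  # the work still completes

print(f"blocking: {blocking_seconds:.2f}s, background: {background_seconds:.2f}s")
```

The background version returns almost immediately, while the same work still gets done afterwards. Celery gives you the same effect, but with the work running in a separate process and the hand-off going through a broker.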

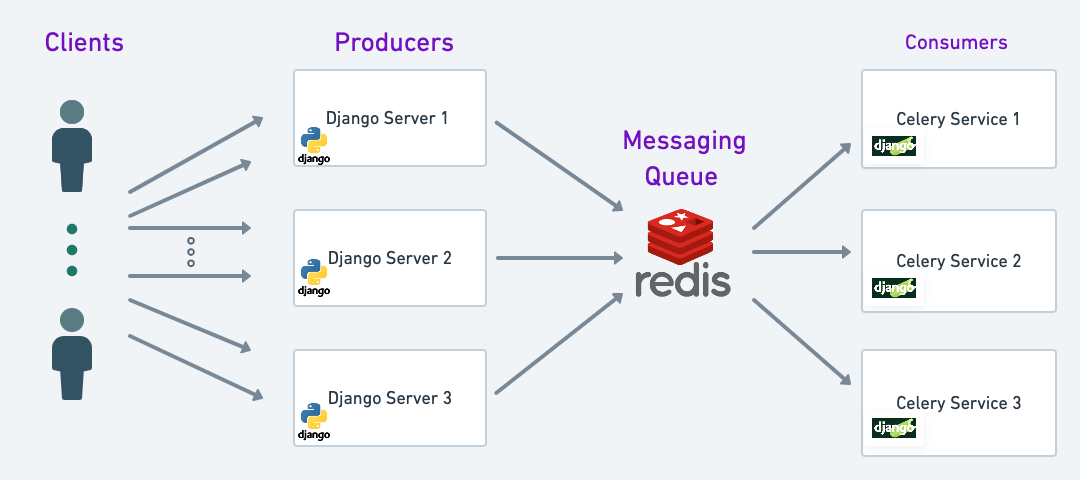

Whenever a client makes a request to the Django application that requires a long-running operation or a call to an external API, Django creates a new entry in Redis. Celery keeps listening to the Redis queue for new entries; when one arrives, it picks up the task and starts executing it. Here are the main terms:

- Clients - Any user or device making a request

- Producer - The Django server, which creates the asynchronous tasks

- Messaging queue - The Redis service, which keeps track of all the tasks that need to be performed

- Consumers - Celery workers, which pick tasks from the Redis queue and execute them. When a worker picks up a task, the task is reserved for that worker so that no other worker picks it up, and once it has been acknowledged it is removed from Redis so that it is not executed again. If a task fails, it can be sent back to the queue for a retry (you need to add some additional configuration for this feature).
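The roles above can be sketched in a few lines of plain Python. This is only an in-process analogy, not how Celery is implemented: `queue.Queue` stands in for Redis, a thread stands in for the Celery worker, and the function names are hypothetical.

```python
import queue
import threading

task_queue = queue.Queue()  # stand-in for the Redis queue
results = []


def producer(user: str) -> None:
    """Django's role: describe the work and enqueue it instead of doing it."""
    task_queue.put({"task": "send_welcome_email", "user": user})


def consumer() -> None:
    """The worker's role: take tasks off the queue and execute them."""
    while True:
        message = task_queue.get()
        if message is None:  # sentinel used in this sketch to stop the worker
            task_queue.task_done()
            break
        results.append(f"{message['task']} done for {message['user']}")
        task_queue.task_done()  # roughly analogous to acknowledging the task


worker = threading.Thread(target=consumer)
worker.start()

for name in ("alice", "bob"):
    producer(name)

task_queue.put(None)  # ask the worker to shut down
task_queue.join()  # wait until every queued item has been processed
worker.join()
print(results)
```

The producer never runs the task itself. It only describes the work in a message, which is why the producer and the consumer can live in different processes or on different machines.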

Since it is a queue, any new task created by Django goes to the back of the queue, and Celery consumers pick tasks from the front. In short, tasks are picked up in FIFO (first in, first out) order.
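As a tiny illustration of that ordering, here is a sketch using `collections.deque` as a stand-in for the queue. The task names are made up.

```python
from collections import deque

tasks = deque()

# The producer adds new tasks at the back of the queue...
for name in ("task-1", "task-2", "task-3"):
    tasks.append(name)

# ...and the consumer takes them from the front.
executed = []
while tasks:
    executed.append(tasks.popleft())

print(executed)  # ['task-1', 'task-2', 'task-3']
```

With several workers running in parallel, tasks are still picked up in this order, but they may finish in a different order.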

Now that you have a rough idea of how it works, let's look at when you should use Celery tasks.

- You have to interact with third-party services that are not under your control. For example, you want to send an email or an OTP.

- You have a heavy data processing feature that might take a long time. For example, you want to run an image recognition model over an image a user has uploaded.

- You want to run multiple operations that are not super important and can be retried later if they fail. For example, when a user signs up, you need to create entries in 5-6 different tables. This can take a lot of time, and if any one entry creation fails, your user might see a failure. It is better to delegate the operation to Celery, so that if anything fails you can retry it without impacting the user journey.
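The "retry it later" idea in the last point can be sketched without Celery. The helper `run_with_retries` and the flaky function below are hypothetical. In a real Celery task, retries are configured through task options such as `autoretry_for` and `max_retries` rather than a hand-written loop.

```python
import time


def run_with_retries(func, max_retries: int = 3, delay_seconds: float = 0.0):
    """Call func until it succeeds or max_retries retries are used up."""
    attempt = 0
    while True:
        try:
            return func()
        except Exception:
            if attempt >= max_retries:
                raise  # give up and surface the last error
            attempt += 1
            time.sleep(delay_seconds)  # wait before the next attempt


calls = {"count": 0}


def create_signup_rows() -> str:
    """Hypothetical operation that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary database error")
    return "rows created"


outcome = run_with_retries(create_signup_rows)
print(outcome, "after", calls["count"], "attempts")
```

Because the retries happen in the background, the user who signed up never sees the two failed attempts.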

Here is the official documentation on how to set up Celery with a Django application.